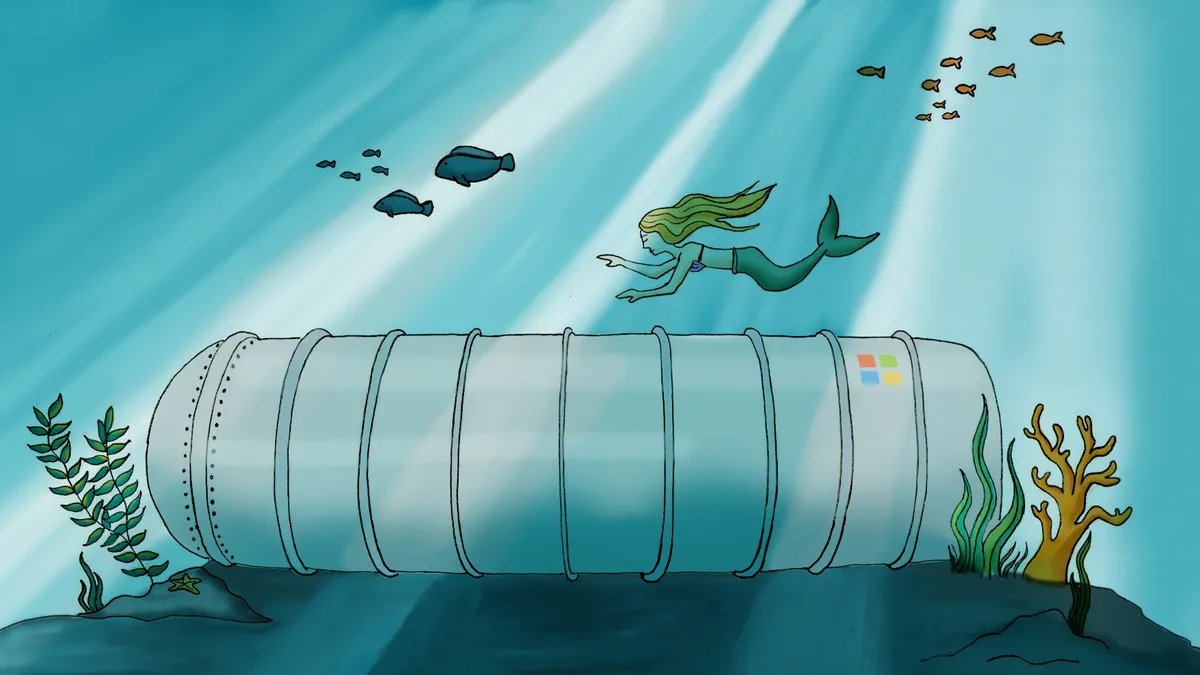

The ocean floor was once reserved for bottom feeders and the occasional shipwreck. But now Microsoft is working to make it hospitable for IT too.

Before that can happen, though, humans must master building in the ocean, which offers plenty of untouched water. The ocean's vastness, along with the search for new ways to save energy, was one of the founding reasons for Project Natick: a collision of IT and submarine technologies.

The cloud serves as a virtual alternative to organizations' on-premises data centers, so cloud vendors take on the burden of data center maintenance and functionality. As more companies adopt the cloud, vendors like Microsoft are searching for ways to reduce the energy their servers require. One such solution happens to lie at the bottom of the ocean.

Land-based servers eat up energy and staff time for cooling and maintenance. Hoping to shield servers from external disruptions, Microsoft is continuing its research into undersea data centers with the latest phase of Project Natick.

Ben Cutler, project manager of Microsoft's Project Natick, said his first reaction to the notion of submerging computers in water was, "I'm not going to that group." But viewed through an engineer's eyes, the idea of sunken computers, although nuanced, is not crazy, he told CIO Dive in an interview.

Project Natick is a "marriage" of two industries, IT and marine technology, only one of which Microsoft has mastered. To complement its IT expertise, the company turned to Naval Group, a submarine builder that also offers renewable energy solutions.

The vessel was modeled on Naval Group's underwater designs, according to Microsoft. It rests on a triangular base and uses a "dual air-water system" to keep the internal data center cool, just as it would need to be on land.

Microsoft takes on conservation

Data centers are inherently unfriendly to energy conservation, but the "idea that I could build a data center and collocate it with renewable energy is actually not a new one," said Cutler. It is, however, an "appealing" one.

Project Natick is more than a nod to the Massachusetts town: it's Microsoft's pursuit of a renewable energy frontier. Unlike "space constrained" Europe, the U.S. makes little use of offshore wind power. Yet offshore energy is where the designers behind the research project drew inspiration.

Tidal energy is generated by ocean currents, using turbines that work like wind turbines but while "sitting on the seabed as the water moves back and forth," said Cutler. Energy from waves is still "very nascent," but this type of research could accelerate its progress.
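Like wind turbines, tidal turbines capture the kinetic energy of a moving fluid, and their output scales with the cube of the flow speed. The Python sketch below is an illustration, not Microsoft's or any vendor's model: it assumes an idealized sinusoidal current with the roughly 12.42-hour period of the principal lunar tide, and the peak speed, rotor area and power coefficient are hypothetical values. It shows how the water "moves back and forth" on a schedule that can be predicted in advance.

```python
import math

SEAWATER_DENSITY = 1025.0  # kg/m^3, typical value for seawater
M2_PERIOD_HOURS = 12.42    # period of the principal lunar semidiurnal tide

def current_speed(t_hours: float, v_max: float) -> float:
    """Idealized tidal current in m/s: flows one way, then reverses (signed)."""
    return v_max * math.sin(2 * math.pi * t_hours / M2_PERIOD_HOURS)

def turbine_power_watts(v: float, rotor_area_m2: float, power_coeff: float) -> float:
    """Kinetic power captured by a seabed turbine: 0.5 * rho * A * Cp * |v|^3.
    A bidirectional turbine works on ebb and flood alike, so only |v| matters."""
    return 0.5 * SEAWATER_DENSITY * rotor_area_m2 * power_coeff * abs(v) ** 3

# Hypothetical turbine: 2.5 m/s peak current, 100 m^2 rotor, Cp = 0.4
for hour in range(0, 13, 3):
    v = current_speed(hour, v_max=2.5)
    kw = turbine_power_watts(v, rotor_area_m2=100.0, power_coeff=0.4) / 1000
    print(f"t={hour:2d} h  current={v:+.2f} m/s  power={kw:7.1f} kW")
```

Because power depends on the cube of speed, output swings sharply over each tidal cycle, but the swings follow the tide table rather than the weather.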

Project Natick is an experiment in an otherwise unexplored space for IT. "This is a potentially innovative approach for the sector," Gary Cook, senior corporate campaigner for Greenpeace's Climate & Energy Campaign, told CIO Dive. Harnessing ocean cooling could cut the energy that land-based servers demand, he said.

However, because the cloud market is expanding so rapidly, it is "uncertain" whether Microsoft can keep up the "securing of a renewable electricity supply in many markets," according to Cook.

Other tech companies are also working to reduce their carbon footprints. As the cloud consumes the storage market, cloud vendors have to come up with creative ways to cut energy consumption.

About 40% of the power consumed by Amazon Web Services' global infrastructure came from renewable sources by the end of 2016, according to the company. AWS has 10 solar and wind farms that produce about 2.6 million megawatt-hours of energy to power its data centers in Ohio and the Northern Virginia area.

Google turned to machine learning to learn how its data centers could achieve maximum efficiency. In its early stages, the model told engineers that the best way to save energy was to "shut down the entire facility," according to the company. Engineers had to teach the algorithm to be "a responsible adult."

The models have since helped Google cut the energy used to cool its data centers by as much as 40%, and the company also draws on wind and solar projects.

As for the immediate environmental impact of a submerged data center, the data so far shows minimal differences, according to Cutler. Because the currents keep the water moving, the water around the vessel essentially regulates its own temperature.

Imagine pouring a cup of warm water into a river, Cutler said. The water's temperature might rise slightly right where it is poured, but the warmth dissipates with the current. Research from the earlier test off California's coast, with the vessel Leona Philpot, found that temperatures differed by only a few thousandths of a degree.
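Cutler's cup-of-water analogy is an energy balance: the heat a vessel rejects is spread across however much water flows past it, so the temperature rise shrinks as the flow grows. The Python sketch below assumes complete, steady mixing and uses a hypothetical 100 kW heat load and made-up flow rates; it illustrates the principle and is not derived from Microsoft's measurements.

```python
SEAWATER_SPECIFIC_HEAT = 3990.0  # J/(kg*K), approximate value for seawater

def temperature_rise_kelvin(heat_watts: float, water_flow_kg_per_s: float) -> float:
    """Steady-state energy balance, assuming all rejected heat warms the passing
    water and mixes fully: delta_T = P / (m_dot * c_p)."""
    return heat_watts / (water_flow_kg_per_s * SEAWATER_SPECIFIC_HEAT)

# Hypothetical: 100 kW of server heat spread into ever larger volumes of water
for flow in (10.0, 1_000.0, 100_000.0):
    dt = temperature_rise_kelvin(100_000.0, flow)
    print(f"flow={flow:>9,.0f} kg/s  rise={dt:.4f} K")
```

The same heat that would noticeably warm a trickle of water becomes a fraction of a thousandth of a degree once a large current carries it away.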

Sea life is also a factor, though Cutler admitted he was more afraid of what California's sea lion population would do to the vessel than the other way around. Still, the researchers were wary of sounds emitted by the servers, because marine mammals are particularly sensitive to sound.

The researchers ultimately found that noise from the area's large population of snapping shrimp drowned out any noise from the servers.

Microsoft often pursues causes outside its IT wheelhouse. "If you look at Microsoft today, we've got a CEO (Satya Nadella) who really, I think, genuinely has this view that doing good for the world will end up being good for the company," said Cutler. Microsoft is chipping away at the separation between industry and environmental protection.

At the end of the day, "we are citizens of the world," and if a company can work to reduce its carbon footprint, it should, said Cutler.

Science behind the submarine data center

Traditional data centers are sensitive to environmental intrusions, and even with adequate maintenance, things can go wrong. Part of protecting servers from disruption is preventing damage to their metal parts.

Researchers behind Project Natick removed the oxygen and most of the water vapor from the vessel, creating a dry, nitrogen-rich environment. This reduces the chance of corrosion in the "metal-to-metal connection" most servers have, said Cutler.

Even with gold-plated connectors, imperfections are inevitable and moisture can seep in. Traditionally, connectors are analyzed only when computers aren't performing as they should. On inspection, technicians reseat the connectors, which lets them "scrape off" the existing bit of corrosion, he said. If things go as planned, Project Natick avoids that process altogether.

It is not yet known when Project Natick will graduate from research to a commercial offering. The second phase of Project Natick was deployed on June 1 off the coast of Scotland and is set to stay underwater for a year. During that time, Microsoft will collect data and assess the integrity of the infrastructure and its impact on the surrounding environment.

The research project could also offer insights for land data centers. Comparing the submerged data center with a comparable one on land can show whether a "lights out operation," meaning a data center sealed off from human intervention, brings benefits.

Enabling data center access to a broader population

One of the appeals of an immersed data center is its independence from the power grid. Grid-connected data centers drive up power use, because electricity must be stepped up to high voltage and sent long distances over "high tension lines." That long-distance transmission loses about 5% of the electricity, according to Cutler.
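To put a 5% transmission loss in concrete terms: if 5% of generated electricity is lost in transit, a grid-fed facility's demand can only be met by generating more than it uses. The Python sketch below treats Cutler's 5% figure as a fixed fraction, a simplification since real losses vary with distance and load, and the 1 GWh demand is a hypothetical figure.

```python
def generation_needed_kwh(delivered_kwh: float, loss_fraction: float = 0.05) -> float:
    """Energy that must be generated so that `delivered_kwh` arrives after
    a fixed fractional transmission loss (simplifying assumption)."""
    if not 0 <= loss_fraction < 1:
        raise ValueError("loss_fraction must be in [0, 1)")
    return delivered_kwh / (1 - loss_fraction)

demand = 1_000_000.0  # hypothetical: 1 GWh needed at the data center
generated = generation_needed_kwh(demand)
print(f"generate {generated:,.0f} kWh; {generated - demand:,.0f} kWh lost in transit")
```

Note that covering a 5% loss takes slightly more than 5% extra generation, because the loss applies to the larger generated amount, not to the delivered amount.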

Data centers in the U.S. are forecast to consume about 73 billion kilowatt-hours in 2020, according to the 2016 United States Data Center Energy Usage Report. The growth of "hyperscale" data centers is setting the tone for future power demand.
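Annual energy totals are easier to picture as continuous power. The Python sketch below divides the report's 73 billion kWh forecast by the number of hours in 2020 to get the average draw, assuming consumption is spread evenly across the year (real load varies by hour and season).

```python
HOURS_IN_2020 = 366 * 24  # 2020 was a leap year: 8,784 hours

annual_kwh = 73e9  # forecast U.S. data center consumption for 2020
# kWh divided by hours gives average kW; 1 GW = 1,000,000 kW
average_gw = annual_kwh / HOURS_IN_2020 / 1e6
print(f"average continuous draw: {average_gw:.2f} GW")
```

Under that even-load assumption, the forecast works out to a little over 8 GW of round-the-clock demand.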

If energy can be generated and used locally, a data center could reduce its reliance on the batteries or generators it traditionally needs, along with the overhead reflected in its power usage effectiveness (PUE). Because tidal currents follow a predictable pattern, tidal energy offers a more reliable supply. Power then becomes "integrated with its own storage system," according to Cutler.

PUE is the total power used by a data center divided by the power used by its servers, which shows how much overhead the facility carries. For example, a PUE of three means that for every watt delivered to the servers, two more watts are used for something else, such as cooling. Over time, engineers brought PUEs down from four or five to about two using architectures designed to control airflow.
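The PUE arithmetic can be written out directly. This Python sketch uses hypothetical wattages; the first two cases mirror the PUE of three in the example and the roughly two that airflow-focused designs reached, and the third is a lower hypothetical value for comparison.

```python
def pue(total_facility_watts: float, it_equipment_watts: float) -> float:
    """Power usage effectiveness: total facility power / IT equipment power."""
    if it_equipment_watts <= 0:
        raise ValueError("IT equipment power must be positive")
    return total_facility_watts / it_equipment_watts

def overhead_watts_per_it_watt(pue_value: float) -> float:
    """Watts spent on cooling, power conversion, lighting, etc. per watt of IT load."""
    return pue_value - 1.0

# Hypothetical facilities, each with 1,000 W of IT load
for total, it in ((3_000.0, 1_000.0), (2_000.0, 1_000.0), (1_100.0, 1_000.0)):
    p = pue(total, it)
    print(f"PUE {p:.1f}: {overhead_watts_per_it_watt(p):.1f} W overhead per IT watt")
```

A PUE of 1.0 would mean every watt goes to computing; each step down toward it removes overhead, not computing capacity.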

Collocated renewable energy frees a data center from needing backup power; instead, it can have a surplus of energy, which simplifies operations.

But modern data centers are "construction projects," which makes them unpredictable, said Cutler. Natick, by contrast, is manufactured and handled as a manufactured product, which allows rapid deployment and a more consistent experience.

Building on land involves too many variables, like terrain, climate, power supply and people, but Natick offers a truly sealed environment, so "all those problems kind of go away," said Cutler.